publications

Publications by category in reverse chronological order. Generated by jekyll-scholar.

Thesis

-

Towards Physically Reliable and User-Friendly Robot Manipulation
Joonhyung Lee

Robotic systems have become increasingly embedded in various industries and manufacturing processes, expanding their applications into everyday life. However, deploying robots in unstructured environments often requires human-like reasoning for appropriate decision-making and behavior. Recent advances in foundation models have opened new possibilities for enhancing robotic manipulation capabilities and offer a potential way to bridge the gap between robotic systems and human-like reasoning. In this paper, we propose two types of foundation model-based robotic manipulation methods: semi-autonomous teleoperation and preference learning. First, we propose a method that combines physics-based simulation with semantic reasoning using foundation models. This approach aims to improve object placement in complex environments based on scene information and a task description. Second, we introduce the problem of extracting human preferences from visual observations. To this end, our method describes the differences between consecutive images and combines these text descriptions with the image sequence.

Publications

-

Quality-Diversity based Semi-Autonomous Teleoperation using Reinforcement Learning
Sangbeom Park, Taerim Yoon, Joonhyung Lee, and 2 more authors
Neural Networks, 2024

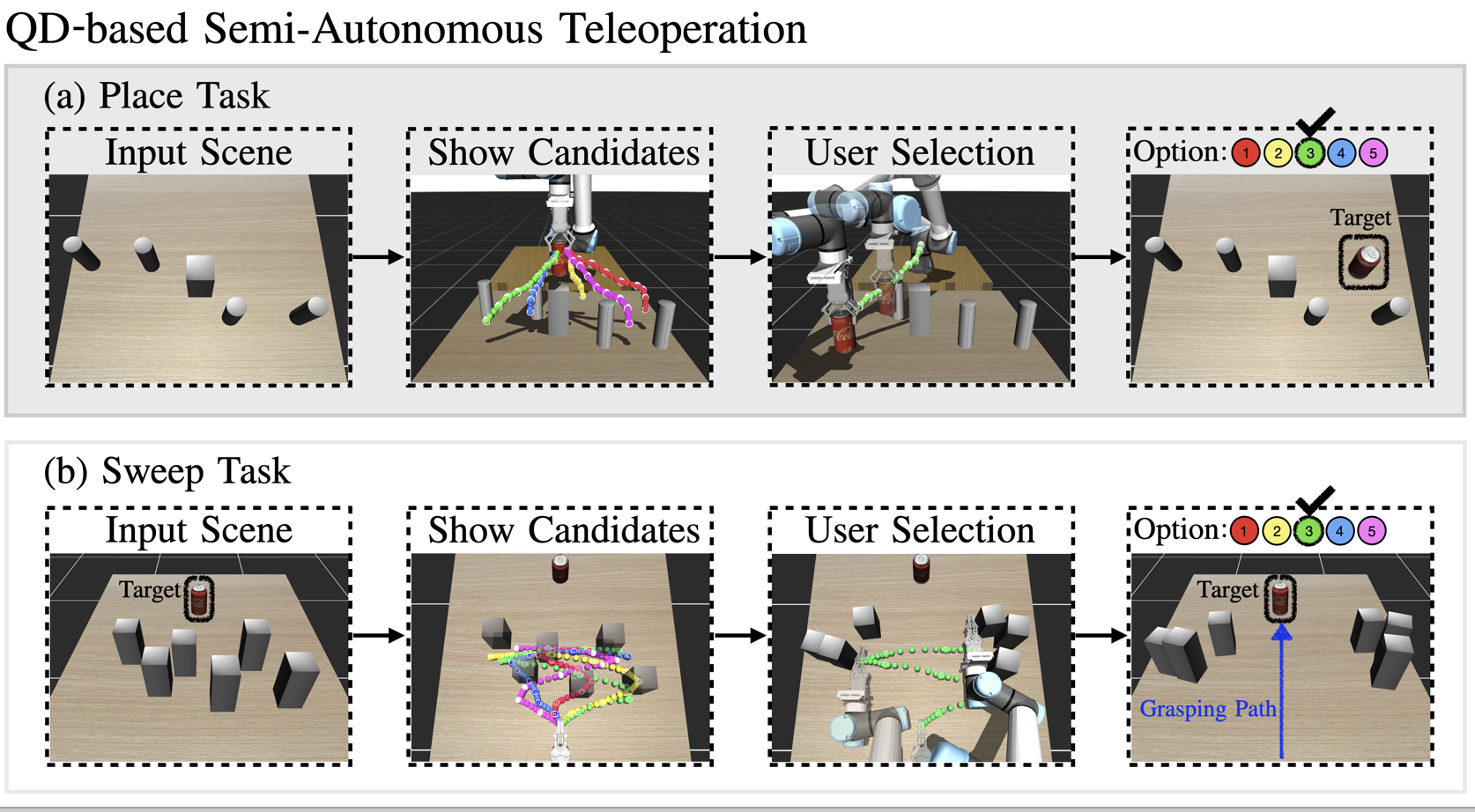

Recent successes in robot learning have significantly enhanced autonomous systems across a wide range of tasks. However, such systems are prone to generating similar or identical solutions, which limits how well the robot can be controlled to behave according to user intentions. These limited robot behaviors may lead to collisions and potential harm to humans. To resolve these limitations, we introduce a semi-autonomous teleoperation framework that enables users to operate a robot by selecting a high-level command, referred to as an option. Our approach aims to provide effective and diverse options through a learned policy, thereby enhancing the efficiency of the proposed framework. In this work, we propose a quality-diversity (QD) based sampling method that simultaneously optimizes both the quality and diversity of options using reinforcement learning (RL). Additionally, we present a mixture of latent variable models to learn multiple policy distributions defined as options. In experiments, we show that the proposed method achieves superior performance in terms of the success rate and diversity of the options in simulation environments. We further demonstrate that our method outperforms manual keyboard control in terms of time duration in cluttered real-world environments.

-

Visual Preference Inference: An Image Sequence-Based Preference Reasoning in Tabletop Object Manipulation
Joonhyung Lee, Sangbeom Park, Yongin Kwon, and 3 more authors
IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2024

In robotic object manipulation, human preferences can often be influenced by the visual attributes of objects, such as color and shape. These properties play a crucial role in operating a robot to interact with objects and align with human intention. In this paper, we focus on the problem of inferring underlying human preferences from a sequence of raw visual observations in tabletop manipulation environments with a variety of object types, named Visual Preference Inference (VPI). To facilitate visual reasoning in the context of manipulation, we introduce the Chain-of-Visual-Residuals (CoVR) method. CoVR employs a prompting mechanism that describes the differences between consecutive images (i.e., visual residuals) and incorporates these texts with the sequence of images to infer the user’s preferences. This approach significantly enhances the ability to understand and adapt to dynamic changes in the visual environment during manipulation tasks. Our method outperforms baseline methods in terms of extracting human preferences from visual sequences in both simulation and real-world environments. Code and videos are available at: https://joonhyung-lee.github.io/vpi/

-

SPOTS: Stable Placement of Objects with Reasoning in Semi-Autonomous Teleoperation Systems
Joonhyung Lee, Sangbeom Park, Jeongeun Park, and 2 more authors
IEEE International Conference on Robotics and Automation (ICRA), 2024

Pick-and-place is one of the fundamental tasks in robotics research. However, attention has mostly focused on the “pick” task, leaving the “place” task relatively unexplored. In this paper, we address the problem of placing objects in the context of a teleoperation framework. In particular, we focus on two aspects of the place task: stability robustness and contextual reasonableness of object placements. Our proposed method combines simulation-driven physical stability verification via real-to-sim with the semantic reasoning capability of large language models. In other words, given place context information (e.g., user preferences, the object to place, and current scene information), our proposed method outputs a probability distribution over the possible placement candidates, considering the robustness and reasonableness of the place task. Our proposed method is extensively evaluated in two simulation environments and one real-world environment, and we show that it can greatly increase the physical plausibility of the placement as well as its contextual soundness while considering user preferences.

-

CLARA: Classifying and Disambiguating User Commands for Reliable Interactive Robotic Agents
Jeongeun Park, Seungwon Lim, Joonhyung Lee, and 4 more authors
IEEE Robotics and Automation Letters (RA-L), 2023

In this paper, we focus on inferring whether a given user command is clear, ambiguous, or infeasible in the context of interactive robotic agents utilizing large language models (LLMs). To tackle this problem, we first present an uncertainty estimation method for LLMs to classify whether the command is certain (i.e., clear) or not (i.e., ambiguous or infeasible). Once a command is classified as uncertain, we further distinguish between ambiguous and infeasible commands by leveraging LLMs with situationally aware context in a zero-shot manner. For ambiguous commands, we disambiguate the command by interacting with users via question generation with LLMs. We believe that proper recognition of the given commands could lead to a decrease in malfunctions and undesired actions of the robot, enhancing the reliability of interactive robot agents. We present a dataset for robotic situational awareness, consisting of pairs of high-level commands, scene descriptions, and labels of command type (i.e., clear, ambiguous, or infeasible). We validate the proposed method on the collected dataset and a pick-and-place tabletop simulation. Finally, we demonstrate the proposed approach in real-world human-robot interaction experiments, i.e., handover scenarios.

-

Robust Detection for Autonomous Elevator Boarding Using a Mobile Manipulator
Seungyoun Shin, Joonhyung Lee, Junhyug Noh, and 1 more author
Asian Conference on Pattern Recognition (ACPR), 2023

Indoor robots are becoming increasingly prevalent across a range of sectors, but the challenge of navigating multi-level structures through elevators remains largely uncharted. For a robot to operate successfully, it’s pivotal to have an accurate perception of elevator states. This paper presents a robust robotic system, tailored to interact adeptly with elevators by discerning their status, actuating buttons, and boarding seamlessly. Given the inherent issues of class imbalance and limited data, we utilize the YOLOv7 model and adopt specific strategies to counteract the potential decline in object detection performance. Our method effectively confronts the class imbalance and label dependency observed in real-world datasets, offering a promising approach to improve indoor robotic navigation systems.